Serverless Integration design pattern on Azure to handle millions of transactions per second

On integration projects, clients often come to us with a very specific set of requirements:

- It should support millions of transactions per second.

- It should auto-scale based on demand (number of requests).

- The client doesn't want to procure large amounts of hardware up front.

- It should support integration with multiple applications and stay open to future integrations with no or minimal changes.

- If an application is down for some time, the framework should have built-in retry logic that runs on a configured schedule.

To meet these requirements, we analyzed the following options for the integration framework:

1. BizTalk Server

2. Azure Functions, Logic Apps & Event Hubs

3. Third-party integration server

Every option has its own pros & cons. After analyzing the client's requirements against each option, we found option 2, the Azure Functions and Event Hubs approach, the most suitable for this type of integration.

Below are the main features that played a key role in selecting this framework for the integration:

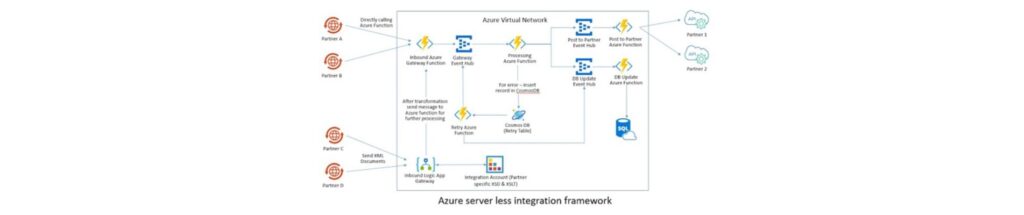

Let's discuss the processing & data flow in this design pattern.

A partner can send data in two ways:

1. Post XML files to the logic app

2. Post data directly to the Azure function

A partner who already produces XML files and does not want to change the existing application, or is not able to send data to the Azure function directly, can send the XML files to the logic app. Below is the process in the logic app:

1. The client posts XML files along with additional fields for the client ID and token.

2. Azure SQL holds the list of clients with their client ID, token, and supported integration details, e.g. input schema, XSLT name and target schema name.

3. Based on the configuration for the particular client and message type, the logic app picks the schema and XSLT from the integration account and, after processing, sends the message to the Azure function for further processing.
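
The lookup in steps 2 and 3 can be sketched roughly as follows. This is a minimal illustration, not the actual logic app: an in-memory dictionary stands in for the Azure SQL configuration table, and every name in it (`CLIENTS`, `IntegrationConfig`, `resolve_integration`, the client ID, token and file names) is hypothetical.

```python
import hmac
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class IntegrationConfig:
    """Per-client, per-message-type settings (hypothetical shape)."""
    input_schema: str
    xslt_name: str
    target_schema: str


# Hypothetical stand-in for the Azure SQL configuration table.
CLIENTS = {
    "client-001": {
        "token": "s3cret-token",
        "integrations": {
            "order": IntegrationConfig(
                input_schema="OrderIn.xsd",
                xslt_name="OrderToCanonical.xslt",
                target_schema="CanonicalOrder.xsd",
            ),
        },
    },
}


def resolve_integration(client_id: str, token: str,
                        message_type: str) -> Optional[IntegrationConfig]:
    """Return the integration config, or None if the client, token or type is unknown."""
    client = CLIENTS.get(client_id)
    if client is None:
        return None
    # Constant-time comparison avoids leaking token contents through timing.
    if not hmac.compare_digest(client["token"].encode(), token.encode()):
        return None
    return client["integrations"].get(message_type)
```

With this sketch, `resolve_integration("client-001", "s3cret-token", "order")` returns the schema and XSLT names to load from the integration account, while a wrong token or an unsupported message type returns `None` so the request can be rejected before any transformation runs.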

1. The Azure function first validates the input message based on its message type.

2. After successful validation, it sends the message to a process event hub.

3. Another Azure function picks up the message and processes it according to the business logic. After business processing, it collects all the data and sends it to another event hub for the DB update.

4. Another Azure function picks up the message from the DB update event hub and saves the data into Cosmos DB, Azure SQL, etc.

5. One more event hub is used to post data to other applications asynchronously. Whenever we need to post data to another application, we send a message to the posttopartner event hub.

6. An Azure function picks up the message from posttopartner and sends it to the partner's endpoint with the security token, etc.
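
To make the flow between the event hubs easier to follow, here is a small in-memory sketch. It does not use the Azure SDK: plain queues stand in for the event hubs, a dictionary stands in for Cosmos DB / Azure SQL, and a list stands in for the partner endpoint. The hub keys `process`, `dbupdate` and `posttopartner`, the `REQUIRED_FIELDS` rules and all function names are assumptions made for illustration, as is the choice to have the business step send to `posttopartner`.

```python
from collections import deque

# In-memory stand-ins for the three event hubs (hypothetical names).
HUBS = {"process": deque(), "dbupdate": deque(), "posttopartner": deque()}
DATABASE = {}          # stand-in for Cosmos DB / Azure SQL
SENT_TO_PARTNER = []   # stand-in for calls to the partner endpoint

# Hypothetical validation rules: required fields per message type.
REQUIRED_FIELDS = {"order": {"id", "type", "payload"}}


def send(hub: str, message: dict) -> None:
    """Publish a message to the named hub."""
    HUBS[hub].append(message)


def validate_and_enqueue(message: dict) -> bool:
    """Steps 1-2: validate by message type, then publish to the process hub."""
    required = REQUIRED_FIELDS.get(message.get("type"))
    if required is None or not required.issubset(message):
        return False
    send("process", message)
    return True


def process_handler(message: dict) -> None:
    """Step 3: business processing, then hand off to the next hubs."""
    enriched = {**message, "status": "processed"}
    send("dbupdate", enriched)
    send("posttopartner", enriched)  # assumption: this message must reach a partner


def dbupdate_handler(message: dict) -> None:
    """Step 4: persist the processed message."""
    DATABASE[message["id"]] = message


def posttopartner_handler(message: dict) -> None:
    """Step 6: deliver to the partner (here just recorded in a list)."""
    SENT_TO_PARTNER.append(message["id"])


HANDLERS = {
    "process": process_handler,
    "dbupdate": dbupdate_handler,
    "posttopartner": posttopartner_handler,
}


def drain() -> None:
    """Run handlers until every hub is empty (simulates the event triggers)."""
    progressed = True
    while progressed:
        progressed = False
        for name, handler in HANDLERS.items():
            while HUBS[name]:
                handler(HUBS[name].popleft())
                progressed = True
```

Calling `validate_and_enqueue({"id": "m1", "type": "order", "payload": "<xml/>"})` followed by `drain()` moves the message through all three hubs. Because each stage only reads from one hub and writes to the next, each stage can scale independently, which is the property the real event-hub-triggered functions rely on.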

1. Every Azure function that works on events has all of its code within a try-catch block.

2. Inside the catch block, it checks the error code. If the error is related to partner system connectivity, it inserts a record into a separate retry table in Cosmos DB.

3. Another Azure function runs periodically and checks for unprocessed records in the retry table. If any exist, it picks them up and posts them to the respective event hub.

4. The respective Azure function picks up the failed message from the event hub and starts processing it again.

5. Every piece of business logic first checks whether the same message has already been processed, and processes it only if it has not.
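
The retry steps above can be sketched as follows. Again this is only an illustration under stated assumptions: a list stands in for the Cosmos DB retry table, a set of message IDs stands in for the "already processed" check, Python's built-in `ConnectionError` stands in for a partner connectivity error code, and `handle_event` and `retry_sweep` are hypothetical names.

```python
from collections import deque

HUBS = {"posttopartner": deque()}   # in-memory stand-in for an event hub
RETRY_TABLE = []                    # stand-in for the Cosmos DB retry table
PROCESSED_IDS = set()               # stand-in for the "already processed" lookup


def handle_event(hub: str, message: dict, deliver) -> str:
    """Process one event with the try/catch and duplicate check described above."""
    # Step 5: process only if the same message was not processed before.
    if message["id"] in PROCESSED_IDS:
        return "skipped"
    try:
        deliver(message)
    except Exception as exc:
        # Step 2: only connectivity failures are parked for retry.
        if isinstance(exc, ConnectionError):
            RETRY_TABLE.append({"hub": hub, "message": message, "requeued": False})
            return "queued_for_retry"
        raise
    PROCESSED_IDS.add(message["id"])
    return "done"


def retry_sweep() -> int:
    """Steps 3-4: scheduled job that reposts parked messages to their hub."""
    reposted = 0
    for record in RETRY_TABLE:
        if not record["requeued"]:
            HUBS[record["hub"]].append(record["message"])
            record["requeued"] = True
            reposted += 1
    return reposted
```

In this sketch a message whose delivery raises `ConnectionError` is parked in `RETRY_TABLE`; the next `retry_sweep()` puts it back on the `posttopartner` queue, and a later `handle_event` call delivers it. The duplicate check makes a second delivery of the same ID return `"skipped"`, so a message reposted by the retry job is never processed twice.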

We hope this blog gives enough insight into how to achieve high-performance integrations that can handle millions of requests per second with on-demand scaling and serverless technology. If you have a similar requirement, drop us a line at [email protected]